2014 Trade Science Inc.

ISSN: 0974-7435, Volume 10, Issue 24
BioTechnology: An Indian Journal
FULL PAPER
BTAIJ, 10(24), 2014 [16338-16346]

Application research of decision tree algorithm in English grade analysis

Zhao Kun
Beihua University, Teacher's College, Jilin (CHINA)

ABSTRACT

This paper introduces and analyses the use of data mining in the management of students' grades. We apply a decision tree to the analysis of grades and investigate the attribute selection measure, including data cleaning. Taking the course scores of an institute of English language as an example, we build a decision tree with the ID3 algorithm and give the detailed calculation process. Because the original algorithm lacks a termination condition, we propose an improved algorithm that helps find the latent factors affecting the grades.

KEYWORDS

Decision tree algorithm; English grade analysis; ID3 algorithm; Classification.

INTRODUCTION

With the rapid development of higher education, English grade analysis, as an important guarantee of scientific management, constitutes a main part of English educational assessment. Research on the application of data mining to the management of students' grades asks how to extract useful hidden information from large amounts of grade data [1-5]. This paper introduces and analyses data mining in the management of students' grades and applies the decision tree to the analysis of grades. It describes the function, status and deficiencies of the management of students' grades and shows how to employ the decision tree in it. It improves the ID3 algorithm for analysing students' grades so that we can find the latent factors that affect the grades. Once these factors are found, we can offer decision-making information to teachers, which also helps raise the quality of teaching [6-10]. English grade analysis thus helps teachers improve teaching quality and supports the decisions of school leaders.

The decision tree-based classification model is widely used because of its particular advantages. Firstly, the structure of the decision tree method is simple and it generates rules that are easy to understand. Secondly, the decision tree model is efficient, which makes it appropriate for training sets with a large amount of data. Furthermore, the computational cost of the decision tree algorithm is relatively small. The decision tree method usually does not require knowledge beyond the training data, and it is well suited to non-numeric data. Finally, the decision tree method has high classification accuracy: it identifies the common characteristics of the objects in a database and classifies them in accordance with the classification model.

The original decision tree algorithm works in a top-down, recursive way [11-12]. Attribute values are compared at the internal nodes of the decision tree, and the branch to follow downward from a node is chosen according to the attribute value. The conclusion is read from the leaf node that is reached. Therefore, a path from the root to a leaf node corresponds to a conjunctive rule, and the entire decision tree corresponds to a disjunction of such rules.
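The correspondence between paths and rules can be illustrated with a short Python sketch. It is not taken from the paper: the tree representation (a class label for a leaf, or a pair of attribute name and branches), the function name `extract_rules` and the toy tree are assumptions made for illustration only.

```python
from typing import Dict, List, Tuple, Union

# A tree is either a class label (leaf) or a pair
# (attribute name, {attribute value: subtree}).
Tree = Union[str, Tuple[str, Dict[str, "Tree"]]]


def extract_rules(tree: Tree, path: Tuple[Tuple[str, str], ...] = ()) -> List[str]:
    """Return one IF ... THEN ... rule per root-to-leaf path."""
    if isinstance(tree, str):
        # Leaf: the tests collected along the path form a conjunction.
        body = " AND ".join(f"{a} = {v}" for a, v in path) or "TRUE"
        return [f"IF {body} THEN class = {tree}"]
    attribute, branches = tree
    rules: List[str] = []
    for value, subtree in branches.items():
        rules.extend(extract_rules(subtree, path + ((attribute, value),)))
    return rules


# Hypothetical toy tree, not the tree of Figure 2.
toy_tree: Tree = (
    "course_type",
    {
        "B": "medium",
        "A": ("whether_required", {"yes": "medium", "no": "general"}),
    },
)

for rule in extract_rules(toy_tree):
    print(rule)
```

Each printed line is one conjunctive rule; the list as a whole is the disjunction that the tree represents.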

The decision tree generation algorithm is divided into two steps [13-15]. The first step is the generation of the tree: at the beginning all the data is in the root node, and the data is then partitioned recursively. The second step is tree pruning, which removes branches caused by noise or abnormal data. A node stops splitting when all the data at the node belongs to the same category or when there are no attributes left with which to split the data.

In the next section, we introduce the construction of the decision tree. In Section 3 we introduce the attribute selection measure. In Section 4, we carry out empirical research based on the ID3 algorithm and propose an improved algorithm. In Section 5 we conclude the paper and give some remarks.

CONSTRUCTION OF DECISION TREE USING ID3

The growing step of the decision tree is shown in Figure 1. The decision tree generation algorithm is described as follows. The algorithm is named Generate_decision_tree and produces a decision tree from the given training data. The input is the training samples, which are represented with discrete values, and the set of candidate attributes, attribute_list. The output is a decision tree.

Step 1. Set up node N. If the samples are all in the same class C, then return N as a leaf node and label it with C.
Step 2. If attribute_list is empty, then return N as a leaf node and label it with the most common class in the samples.
Step 3. Choose test_attribute, the attribute with the highest information gain in attribute_list, and label N with test_attribute.
Step 4. For each value a_i of test_attribute, do the following operations.
Step 5. Node N produces a branch that meets the condition test_attribute = a_i.
Step 6. Let s_i be the set of samples in which test_attribute = a_i. If s_i is empty, then add a leaf and label it with the most common class in the samples. Otherwise add the node returned by Generate_decision_tree(s_i, attribute_list - test_attribute).
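Steps 1 to 6 can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the author's implementation: samples are pairs of an attribute dictionary and a class label, the extra `domains` argument (the possible values of each attribute) is introduced here so that the empty-branch case of Step 6 can occur, and the toy data is hypothetical.

```python
import math
from collections import Counter
from typing import Dict, List, Sequence, Tuple, Union

Sample = Tuple[Dict[str, str], str]          # (attribute values, class label)
Tree = Union[str, Tuple[str, Dict[str, "Tree"]]]


def entropy(labels: Sequence[str]) -> float:
    """Expected information of a list of class labels, in bits."""
    total = len(labels)
    return sum(-(c / total) * math.log2(c / total) for c in Counter(labels).values())


def gain(samples: List[Sample], attribute: str) -> float:
    """Information gain obtained by splitting `samples` on `attribute`."""
    subsets: Dict[str, List[str]] = {}
    for values, label in samples:
        subsets.setdefault(values[attribute], []).append(label)
    expected = sum(len(s) / len(samples) * entropy(s) for s in subsets.values())
    return entropy([label for _, label in samples]) - expected


def generate_decision_tree(samples: List[Sample], attribute_list: List[str],
                           domains: Dict[str, List[str]]) -> Tree:
    labels = [label for _, label in samples]
    if len(set(labels)) == 1:                       # Step 1: a single class
        return labels[0]
    majority = Counter(labels).most_common(1)[0][0]
    if not attribute_list:                          # Step 2: no attributes left
        return majority
    test_attribute = max(attribute_list, key=lambda a: gain(samples, a))  # Step 3
    remaining = [a for a in attribute_list if a != test_attribute]
    branches: Dict[str, Tree] = {}
    for value in domains[test_attribute]:           # Steps 4 and 5
        subset = [s for s in samples if s[0][test_attribute] == value]
        if not subset:                              # Step 6: empty subset
            branches[value] = majority
        else:
            branches[value] = generate_decision_tree(subset, remaining, domains)
    return (test_attribute, branches)


# Hypothetical toy data: the class depends only on "required".
toy_samples: List[Sample] = [
    ({"required": "yes", "difficulty": "high"}, "medium"),
    ({"required": "yes", "difficulty": "low"}, "medium"),
    ({"required": "no", "difficulty": "high"}, "general"),
    ({"required": "no", "difficulty": "low"}, "general"),
]
toy_domains = {"required": ["yes", "no"], "difficulty": ["high", "low"]}
tree = generate_decision_tree(toy_samples, ["difficulty", "required"], toy_domains)
print(tree)
```

On the toy data the sketch splits once on "required", because that attribute separates the two classes completely while "difficulty" has zero gain.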

Figure 1: Growing step of the decision tree

AN IMPROVED ALGORITHM

Attribute selection measure

Suppose $S$ is a set of $s$ data samples and the class label attribute has $m$ different values $C_i$ $(i = 1, 2, \ldots, m)$. Let $s_i$ be the number of samples of class $C_i$ in $S$. For a given sample, the expected information needed for classification is given by formula 1 [11-12].

$$I(s_1, s_2, \ldots, s_m) = -\sum_{i=1}^{m} p_i \log_2 p_i \qquad (1)$$

Here $p_i$ is the probability that a random sample belongs to $C_i$, and it is estimated by $s_i / s$.

Suppose attribute $A$ has $V$ different values $\{a_1, a_2, \ldots, a_V\}$. We can use attribute $A$ to partition $S$ into $V$ subsets $\{S_1, S_2, \ldots, S_V\}$. Let $s_{ij}$ be the number of samples of class $C_i$ in subset $S_j$. The expected information of the partition is given by formula 2.

$$E(A) = \sum_{j=1}^{V} \frac{s_{1j} + s_{2j} + \cdots + s_{mj}}{s}\, I(s_{1j}, s_{2j}, \ldots, s_{mj}) \qquad (2)$$

The factor $(s_{1j} + s_{2j} + \cdots + s_{mj}) / s$ is the weight of the $j$-th subset. For a given subset $S_j$, formula 3 holds [13].

$$I(s_{1j}, s_{2j}, \ldots, s_{mj}) = -\sum_{i=1}^{m} p_{ij} \log_2 p_{ij} \qquad (3)$$

Here $p_{ij} = s_{ij} / |S_j|$ is the probability that a sample of $S_j$ belongs to class $C_i$.

If we branch on $A$, the information gain is given by formula 4 [14].

$$\mathrm{Gain}(A) = I(s_1, s_2, \ldots, s_m) - E(A) \qquad (4)$$

The improved algorithm

The improved algorithm is as follows.

Function Generate_decision_tree(training samples, candidate attribute attribute_list)
{
    Set up node N;
    If samples are in the same class C then
        Return N as leaf node and label it with C;
        Record statistical data meeting the conditions on the leaf node;
    If attribute_list is empty then
        Return N as the leaf node and label it as the most common class of samples;
        Record statistical data meeting the conditions on the leaf node;
    Suppose GainMax = max(Gain1, Gain2, …, Gainn)
    If GainMax [...]

[...] = '85'
Update ks set ci_pi = 'medium' where ci_pj >= '75' and ci_pj [...] = '60' and ci_pj < '75'
Update ks set sjnd = 'high' where sjnd = '1'
Update ks set sjnd = 'medium' where sjnd = '2'
Update ks set sjnd = 'low' where sjnd = '3'

Result of ID3 algorithm

TABLE 2 is the training set of student test score information after data cleaning. We classify the samples into three categories, $C_1$ = "outstanding", $C_2$ = "medium" and $C_3$ = "general", with $s_1 = 300$, $s_2 = 1950$, $s_3 = 880$ and $s = 3130$. According to formula 1, we obtain

$$I(s_1, s_2, s_3) = I(300, 1950, 880) = -\frac{300}{3130}\log_2\frac{300}{3130} - \frac{1950}{3130}\log_2\frac{1950}{3130} - \frac{880}{3130}\log_2\frac{880}{3130} = 1.256003.$$
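The class entropy above can be recomputed from the three class counts with a few lines of Python. The function name `expected_information` is an assumption of this sketch, and the value it prints may differ in the later decimals from the figure quoted in the text, so it is best read as a cross-check rather than as the paper's own computation.

```python
import math
from typing import Sequence


def expected_information(counts: Sequence[float]) -> float:
    """I(s_1, ..., s_m) of formula 1, in bits, from per-class sample counts."""
    total = sum(counts)
    # A class with zero samples contributes nothing (0 * log 0 is taken as 0).
    return sum(-(c / total) * math.log2(c / total) for c in counts if c > 0)


class_counts = [300, 1950, 880]   # outstanding, medium, general
print(round(expected_information(class_counts), 6))
```

Skipping zero counts matters later in the calculation, where some attribute values have no samples of a given class.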

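The entropy of every attribute is calculated as follows. Firstly, calculate whether re-learning. For yes, $s_{11} = 210$, $s_{21} = 950$, $s_{31} = 580$.

$$I(s_{11}, s_{21}, s_{31}) = I(210, 950, 580) = -\frac{210}{1740}\log_2\frac{210}{1740} - \frac{950}{1740}\log_2\frac{950}{1740} - \frac{580}{1740}\log_2\frac{580}{1740} = 1.373186$$

For no, $s_{12} = 90$, $s_{22} = 1000$, $s_{32} = 300$.

$$I(s_{12}, s_{22}, s_{32}) = I(90, 1000, 300) = -\frac{90}{1390}\log_2\frac{90}{1390} - \frac{1000}{1390}\log_2\frac{1000}{1390} - \frac{300}{1390}\log_2\frac{300}{1390} = 1.074901$$

If the samples are classified according to whether re-learning, the expected information is

$$E(\text{whether re-learning}) = \frac{1740}{3130} I(s_{11}, s_{21}, s_{31}) + \frac{1390}{3130} I(s_{12}, s_{22}, s_{32}) = 0.555911 \times 1.373186 + 0.444089 \times 1.074901 = 1.240721.$$

So the information gain is

$$\mathrm{Gain}(\text{whether re-learning}) = I(s_1, s_2, s_3) - E(\text{whether re-learning}) = 1.256003 - 1.240721 = 0.015282.$$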
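Formulas 2 and 4 can be applied mechanically once the per-class counts of each branch are known. The sketch below does this for whether re-learning; the function names and the dictionary layout are assumptions of the sketch, and because the class entropy is recomputed from the counts, the printed gain may not match the figure in the text to every decimal.

```python
import math
from typing import Dict, Sequence


def expected_information(counts: Sequence[float]) -> float:
    """Formula 1 (and formula 3 for a subset), in bits."""
    total = sum(counts)
    return sum(-(c / total) * math.log2(c / total) for c in counts if c > 0)


def information_gain(partition: Dict[str, Sequence[float]]) -> float:
    """Gain(A) of formula 4.

    `partition` maps each value a_j of attribute A to the per-class counts
    (s_1j, ..., s_mj) of the samples that take that value.
    """
    m = len(next(iter(partition.values())))
    class_totals = [sum(counts[i] for counts in partition.values()) for i in range(m)]
    s = sum(class_totals)
    e_a = sum(sum(counts) / s * expected_information(counts)      # formula 2
              for counts in partition.values())
    return expected_information(class_totals) - e_a               # formula 4


# Counts per class (outstanding, medium, general) for each branch.
re_learning = {"yes": [210, 950, 580], "no": [90, 1000, 300]}
print(round(information_gain(re_learning), 6))
```

The same call works for the other attributes by passing their branch counts, for example four branches for course type.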

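Secondly, calculate course type. When it is A, $s_{11} = 110$, $s_{21} = 200$, $s_{31} = 580$.

$$I(s_{11}, s_{21}, s_{31}) = I(110, 200, 580) = -\frac{110}{890}\log_2\frac{110}{890} - \frac{200}{890}\log_2\frac{200}{890} - \frac{580}{890}\log_2\frac{580}{890} = 1.259382$$

For course type B, $s_{12} = 100$, $s_{22} = 400$, $s_{32} = 0$.

$$I(s_{12}, s_{22}, s_{32}) = I(100, 400, 0) = -\frac{100}{500}\log_2\frac{100}{500} - \frac{400}{500}\log_2\frac{400}{500} - 0 = 0.721928$$

For course type C, $s_{13} = 0$, $s_{23} = 550$, $s_{33} = 0$.

$$I(s_{13}, s_{23}, s_{33}) = I(0, 550, 0) = -0 - \frac{550}{550}\log_2\frac{550}{550} - 0 = 0$$

For course type D, $s_{14} = 90$, $s_{24} = 800$, $s_{34} = 300$.

$$I(s_{14}, s_{24}, s_{34}) = I(90, 800, 300) = -\frac{90}{1190}\log_2\frac{90}{1190} - \frac{800}{1190}\log_2\frac{800}{1190} - \frac{300}{1190}\log_2\frac{300}{1190} = 1.168009$$

$$E(\text{course type}) = \frac{890}{3130} I(s_{11}, s_{21}, s_{31}) + \frac{500}{3130} I(s_{12}, s_{22}, s_{32}) + \frac{550}{3130} I(s_{13}, s_{23}, s_{33}) + \frac{1190}{3130} I(s_{14}, s_{24}, s_{34}) = 0.91749$$

$$\mathrm{Gain}(\text{course type}) = 1.256003 - 0.91749 = 0.338513$$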

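Thirdly, calculate paper difficulty. For high, $s_{11} = 110$, $s_{21} = 900$, $s_{31} = 280$.

$$I(s_{11}, s_{21}, s_{31}) = I(110, 900, 280) = -\frac{110}{1290}\log_2\frac{110}{1290} - \frac{900}{1290}\log_2\frac{900}{1290} - \frac{280}{1290}\log_2\frac{280}{1290} = 1.14385$$

For medium, $s_{12} = 190$, $s_{22} = 700$, $s_{32} = 300$.

$$I(s_{12}, s_{22}, s_{32}) = I(190, 700, 300) = -\frac{190}{1190}\log_2\frac{190}{1190} - \frac{700}{1190}\log_2\frac{700}{1190} - \frac{300}{1190}\log_2\frac{300}{1190} = 1.374086$$

For low, $s_{13} = 0$, $s_{23} = 350$, $s_{33} = 300$.

$$I(s_{13}, s_{23}, s_{33}) = I(0, 350, 300) = -0 - \frac{350}{650}\log_2\frac{350}{650} - \frac{300}{650}\log_2\frac{300}{650} = 0.995727$$

$$E(\text{paper difficulty}) = \frac{1290}{3130} I(s_{11}, s_{21}, s_{31}) + \frac{1190}{3130} I(s_{12}, s_{22}, s_{32}) + \frac{650}{3130} I(s_{13}, s_{23}, s_{33}) = 1.200512$$

$$\mathrm{Gain}(\text{paper difficulty}) = 1.256003 - 1.200512 = 0.055491$$

Fourthly, calculate whether required course.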

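For yes, $s_{11} = 210$, $s_{21} = 850$, $s_{31} = 600$.

$$I(s_{11}, s_{21}, s_{31}) = I(210, 850, 600) = -\frac{210}{1660}\log_2\frac{210}{1660} - \frac{850}{1660}\log_2\frac{850}{1660} - \frac{600}{1660}\log_2\frac{600}{1660} = 1.402447$$

For no, $s_{12} = 90$, $s_{22} = 1100$, $s_{32} = 280$.

$$I(s_{12}, s_{22}, s_{32}) = I(90, 1100, 280) = -\frac{90}{1470}\log_2\frac{90}{1470} - \frac{1100}{1470}\log_2\frac{1100}{1470} - \frac{280}{1470}\log_2\frac{280}{1470} = 1.015442$$

$$E(\text{whether required}) = \frac{1660}{3130} I(s_{11}, s_{21}, s_{31}) + \frac{1470}{3130} I(s_{12}, s_{22}, s_{32}) = 1.220681$$

$$\mathrm{Gain}(\text{whether required}) = 1.256003 - 1.220681 = 0.035322$$

TABLE 2: Training set of student test scores

| Course type | Whether re-learning | Paper difficulty | Whether required | Score | Statistical data |
|---|---|---|---|---|---|
| D | no | medium | no | outstanding | 90 |
| B | yes | medium | yes | outstanding | 100 |
| A | yes | high | yes | medium | 200 |
| D | no | low | no | medium | 350 |
| C | yes | medium | yes | general | 300 |
| A | yes | high | no | medium | 250 |
| B | no | high | no | medium | 300 |
| A | yes | high | yes | outstanding | 110 |
| D | yes | medium | yes | medium | 500 |
| D | no | low | yes | general | 300 |
| A | yes | high | no | general | 280 |
| B | no | high | yes | medium | 150 |
| C | no | medium | no | medium | 200 |

Result of improved algorithm

The original algorithm lacks a termination condition.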

Only two records remain to be classified in one subtree, as shown in TABLE 3.

TABLE 3: Special case for classification of the subtree

| Course type | Whether re-learning | Paper difficulty | Whether required | Score | Statistical data |
|---|---|---|---|---|---|
| A | no | high | yes | medium | 15 |
| A | no | high | yes | general | 20 |

For the records in TABLE 3, all the calculated gains are 0.00, so GainMax = 0.00, which does not satisfy the recursive termination condition of the original algorithm. The tree obtained is not reasonable, so we adopt the improved algorithm. The decision tree built with the improved algorithm is shown in Figure 2.

Figure 2: Decision tree using improved algorithm
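The effect of an extra termination test on GainMax can be sketched in Python. The exact condition used in the improved algorithm is not recoverable from the text, so this sketch assumes the simplest reading: stop and emit a leaf when GainMax does not exceed a threshold `min_gain` (0 by default). The row layout, the function names and the leaf dictionary that stores the class statistics are likewise assumptions, and branches are created only for attribute values that occur in the data.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple

# One row of a TABLE 2-style training set:
# (attribute values, class label, statistical data, i.e. number of students)
Row = Tuple[Dict[str, str], str, int]


def class_counts(rows: List[Row]) -> Dict[str, int]:
    counts: Dict[str, int] = defaultdict(int)
    for _, label, n in rows:
        counts[label] += n
    return dict(counts)


def info(rows: List[Row]) -> float:
    counts = class_counts(rows)
    total = sum(counts.values())
    return sum(-(c / total) * math.log2(c / total) for c in counts.values() if c > 0)


def gain(rows: List[Row], attribute: str) -> float:
    subsets: Dict[str, List[Row]] = defaultdict(list)
    for row in rows:
        subsets[row[0][attribute]].append(row)
    total = sum(n for _, _, n in rows)
    e_a = sum(sum(n for _, _, n in sub) / total * info(sub) for sub in subsets.values())
    return info(rows) - e_a


def leaf(rows: List[Row]) -> dict:
    counts = class_counts(rows)
    # Label with the most common class and record the statistics on the leaf.
    return {"label": max(counts, key=counts.get), "statistics": counts}


def generate_decision_tree(rows: List[Row], attribute_list: List[str],
                           min_gain: float = 0.0) -> dict:
    if len(class_counts(rows)) == 1 or not attribute_list:
        return leaf(rows)
    gains = {a: gain(rows, a) for a in attribute_list}
    best = max(gains, key=gains.get)
    if gains[best] <= min_gain:        # assumed extra termination test on GainMax
        return leaf(rows)
    remaining = [a for a in attribute_list if a != best]
    subsets: Dict[str, List[Row]] = defaultdict(list)
    for row in rows:
        subsets[row[0][best]].append(row)
    return {"attribute": best,
            "branches": {v: generate_decision_tree(sub, remaining, min_gain)
                         for v, sub in subsets.items()}}


# The two records of TABLE 3: identical attribute values, different classes.
same = {"course_type": "A", "re_learning": "no", "difficulty": "high", "required": "yes"}
table3: List[Row] = [(same, "medium", 15), (same, "general", 20)]
print(generate_decision_tree(table3, list(same)))
```

For the two records of TABLE 3 every gain is zero, so the sketch returns a single leaf labelled "general" that keeps both class counts, instead of growing a chain of uninformative splits.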

CONCLUSIONS

In this paper we study the construction of the decision tree and the attribute selection measure. Because the original algorithm lacks a termination condition, we propose an improved algorithm. Taking the course scores of an institute of English language as an example, we show that it can find the latent factors that affect the grades.

REFERENCES

[1] Xuelei Xu, Chunwei Lou; "Applying Decision Tree Algorithms in English Vocabulary Test Item Selection", IJACT: International Journal of Advancements in Computing Technology, 4(4), 165-173 (2012).

[2] Huawei Zhang; "Lazy Decision Tree Method for Distributed Privacy Preserving Data Mining", IJACT: International Journal of Advancements in Computing Technology, 4(14), 458-465 (2012).

[3] Xin-hua Zhu, Jin-ling Zhang, Jiang-tao Lu; "An Education Decision Support System Based on Data Mining Technology", JDCTA: International Journal of Digital Content Technology and its Applications, 6(23), 354-363 (2012).

[4] Zhen Liu, XianFeng Yang; "An application model of fuzzy clustering analysis and decision tree algorithms in building web mining", JDCTA: International Journal of Digital Content Technology and its Applications, 6(23), 492-500 (2012).

[5] Guang-xian Ji; "The research of decision tree learning algorithm in technology of data mining classification", JCIT: Journal of Convergence Information Technology, 7(10), 216-223 (2012).

[6] Fuxian Huang; "Research of an Algorithm for Generating Cost-Sensitive Decision Tree Based on Attribute Significance", JDCTA: International Journal of Digital Content Technology and its Applications, 6(12), 308-316 (2012).

[7] M. Sudheep Elayidom, Sumam Mary Idikkula, Joseph Alexander; "Design and Performance analysis of Data mining techniques Based on Decision trees and Naive Bayes classifier For", JCIT: Journal of Convergence Information Technology, 6(5), 89-98 (2011).

[8] Marjan Bahrololum, Elham Salahi, Mahmoud Khaleghi; "An Improved Intrusion Detection Technique based on two Strategies Using Decision Tree and Neural Network", JCIT: Journal of Convergence Information Technology, 4(4), 96-101 (2009).

[9] Bor-tyng Wang, Tian-Wei Sheu, Jung-Chin Liang, Jian-Wei Tzeng, Nagai Masatake; "The Study of Soft Computing on the Field of English Education: Applying Grey S-P Chart in English Writing Assessment", JDCTA: International Journal of Digital Content Technology and its Applications, 5(9), 379-388 (2011).

[10] Mohamad Farhan Mohamad Mohsin, Mohd Helmy Abd Wahab, Mohd Fairuz Zaiyadi, Cik Fazilah Hibadullah; "An Investigation into Influence Factor of Student Programming Grade Using Association Rule Mining", AISS: Advances in Information Sciences and Service Sciences, 2(2), 19-27 (2010).

[11] Hao Xin; "Assessment and Analysis of Hierarchical and Progressive Bilingual English Education Based on Neuro-Fuzzy approach", AISS: Advances in Information Sciences and Service Sciences, 5(1), 269-276 (2013).

[12] Hong-chao Chen, Jin-ling Zhang, Ya-qiong Deng; "Application of Mixed-Weighted-Association-Rules-Based Data Mining Technology in College Examination grades Analysis", JDCTA: International Journal of Digital Content Technology and its Applications, 6(10), 336-344 (2012).

[13] Yuan Wang, Lan Zheng; "Endocrine Hormones Association Rules Mining Based on Improved Apriori Algorithm", JCIT: Journal of Convergence Information Technology, 7(7), 72-82 (2012).

[14] Tian Bai, Jinchao Ji, Zhe Wang, Chunguang Zhou; "Application of a Global Categorical Data Clustering Method in Medical Data Analysis", AISS: Advances in Information Sciences and Service Sciences, 4(7), 182-190 (2012).

[15] HongYan Mei, Yan Wang, Jun Zhou; "Decision Rules Extraction Based on Necessary and Sufficient Strength and Classification Algorithm", AISS: Advances in Information Sciences and Service Sciences, 4(14), 441-449 (2012).

[16] Liu Yong; "The Building of Data Mining Systems based on Transaction Data Mining Language using Java", JDCTA: International Journal of Digital Content Technology and its Applications, 6(14), 298-305 (2012).


- pruning1100lu.com相关文档

- dust1100lu.com

- performed1100lu.com

- carry1100lu.com

- Hove1100lu.com

- 移植1100lu.com

- 熔融1100lu.com

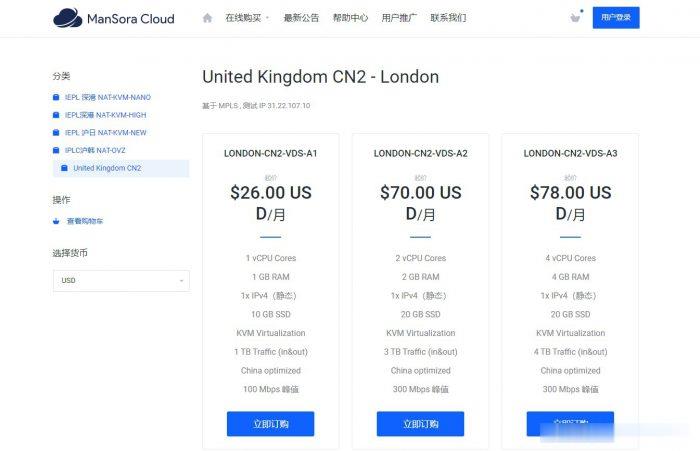

ManSora:英国CN2 VPS,1核/1GB内存/10GB SSD/1TB流量/100Mbps/KVM,$18.2/月

mansora怎么样?mansora是一家国人商家,主要提供沪韩IEPL、沪日IEPL、深港IEPL等专线VPS。现在新推出了英国CN2 KVM VPS,线路为AS4809 AS9929,可解锁 Netflix,并有永久8折优惠。英国CN2 VPS,$18.2/月/1GB内存/10GB SSD空间/1TB流量/100Mbps端口/KVM,有需要的可以关注一下。点击进入:mansora官方网站地址m...

DMIT:香港国际线路vps,1.5GB内存/20GB SSD空间/4TB流量/1Gbps/KVM,$9.81/月

DMIT怎么样?DMIT是一家美国主机商,主要提供KVM VPS、独立服务器等,主要提供香港CN2、洛杉矶CN2 GIA等KVM VPS,稳定性、网络都很不错。支持中文客服,可Paypal、支付宝付款。2020年推出的香港国际线路的KVM VPS,大带宽,适合中转落地使用。现在有永久9折优惠码:July-4-Lite-10OFF,季付及以上还有折扣,非 中国路由优化;AS4134,AS4837 均...

亚州云-美国Care云服务器,618大带宽美国Care年付云活动服务器,采用KVM架构,支持3天免费无理由退款!

官方网站:点击访问亚州云活动官网活动方案:地区:美国CERA(联通)CPU:1核(可加)内存:1G(可加)硬盘:40G系统盘+20G数据盘架构:KVM流量:无限制带宽:100Mbps(可加)IPv4:1个价格:¥128/年(年付为4折)购买:直达订购链接测试IP:45.145.7.3Tips:不满意三天无理由退回充值账户!地区:枣庄电信高防防御:100GCPU:8核(可加)内存:4G(可加)硬盘:...

1100lu.com为你推荐

-

12306崩溃12306网站显示异常,什么原因啊广东GDP破10万亿中国GDP10万亿,广东3万亿多。占了中国三分之一的经纪。如果,我是说如果。广东独立了。中国会有什甲骨文不满赔偿未签合同被辞退的赔偿www.522av.com现在怎样在手机上看AV336.com求那个网站 你懂得 1552517773@qqwww.55125.cnwww95599cn余额查询javmoo.com找下载JAV软件格式的网站5xoy.comhttp://www.5yau.com (舞与伦比),以前是这个地址,后来更新了,很长时间没玩了,谁知道现在的地址? 谢谢,百度指数词什么是百度指数m88.comm88.com现在的官方网址是哪个啊 ?m88.com分析软件?